How Cultural Bias Influences AI: From Smiling Samurai to Glamorous Aid Workers

By Liu Liu – LIC Board Member

Have you ever thought, “My culture is the best”? It’s natural! In the study of culture, this is called ethnocentrism. Being ethnocentric means “having or based on the idea that your group or culture is better or more important than others”.

However, this cultural bias can influence AI in surprising ways. But how does cultural bias influence AI, you might ask? Let’s use AI image generation as an example to explore how.

Imagine searching for “cultural diversity” images using AI. You might expect a global mix of people. But what if the results are mostly young people in modern Western clothes? This reflects a bias in the AI’s training data.

In another experiment, AI was asked to generate group photos of historical people. The first shows the samurai warriors of Japan. Look at them, what a lovely bunch! Considering that they were either about to go into bloody battles or had just come back from them, they look a bit too happy, don’t you think? In the same experiment, this is the AI-generated group of American Indians, all happy and smiley as well.

How far from the truth are these AI-generated photos? Compare them with real archive photos of Japanese samurai warriors and American Indians. All of the AI-generated group photos in this experiment have the so-called “American smile”, because they reflect the American idea of what facial expression people ought to have when having their group photo taken.


Image from https://medium.com/@socialcreature/ai-and-the-american-smile-76d23a0fbfaf

In 2015, Kuba Krys, a researcher at the Polish Academy of Sciences, studied the reactions of more than 5,000 people from 44 cultures to a series of photographs of smiling and unsmiling men and women of different races. The study shows that different cultures interpret smiling very differently. In some countries, smiling is read as friendly and confident; in other cultures, it can be seen as dishonest or as not taking things seriously, especially in public places and at important events. If an AI’s training data is selected by people from cultures that see smiles as positive, the result is images in which everyone smiles.

Another example: AI-generated images of humanitarian workers resemble Indiana Jones and Lara Croft – glamorous and charming. In reality, aid workers come from diverse backgrounds and may not look like action movie stars.


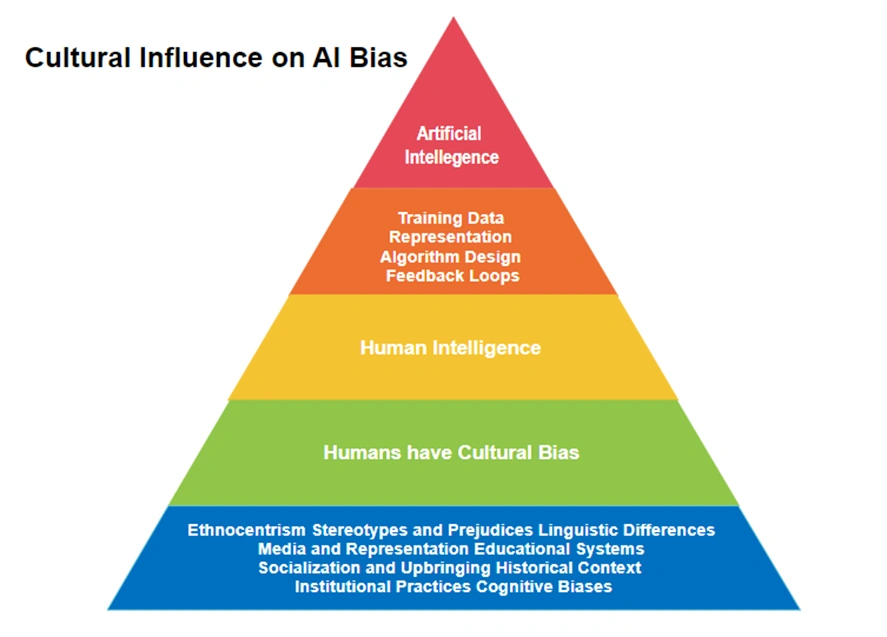

So, how can AI be “intelligent” yet biased? The answer lies in the word “artificial”. There are two meanings to the word “artificial”. The first meaning is “made or produced by human beings rather than occurring naturally, especially as a copy of something natural”. The second meaning is “(of a person or their behavior) insincere or affected”. Put into the context of AI, this means AI is produced by and affected by humans and reflects or amplifies the cultural and other types of biases humans have.

To reduce the influence of cultural bias on AI, we first need to work on the cultural biases we have as humans, and then take practical steps to keep improving AI systems so that they become less culturally biased and fairer.

Here are some useful steps to take.

● Be aware of our own biases. We all have them!

● Use diverse and representative data. Train AI on a global range of cultures.

● Test for bias. Regularly audit AI systems to identify and remove bias.

● Build transparency. Explain how AI works and why it makes certain decisions.

● Assemble inclusive teams. Developers from diverse backgrounds can create fairer AI.

● Develop ethical guidelines. Ensure AI is used responsibly and avoids discrimination.

● Seek input from diverse stakeholders. Get feedback from a wide range of cultures.
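For readers who want to try the “test for bias” step themselves, here is a minimal sketch in Python. It assumes you have already looked at a batch of AI-generated images and written down, by hand, which cultural group each image appears to show. The function name `representation_report`, the group labels, and the 50% tolerance are all hypothetical choices for illustration, not an established auditing standard.

```python
from collections import Counter


def representation_report(labels, expected_groups, tolerance=0.5):
    """Report each expected group's share of a sample of annotated images.

    A group is flagged as under-represented when its share is below
    `tolerance` times the share it would have if every expected group
    appeared equally often. The threshold is an illustrative assumption.
    """
    if not labels or not expected_groups:
        raise ValueError("labels and expected_groups must not be empty")

    counts = Counter(labels)
    total = len(labels)
    equal_share = 1 / len(expected_groups)

    report = {}
    for group in expected_groups:
        share = counts[group] / total  # Counter returns 0 for unseen groups
        report[group] = {
            "share": share,
            "under_represented": share < tolerance * equal_share,
        }
    return report


# Hypothetical hand-made annotations for ten images generated
# from the prompt "cultural diversity".
sample = ["Western"] * 7 + ["East Asian"] * 2 + ["African"] * 1
groups = ["Western", "East Asian", "African", "South Asian"]

for group, row in representation_report(sample, groups).items():
    print(group, row)
```

In this made-up sample, the groups that appear rarely or not at all are the ones flagged. A real audit would need far more images, carefully defined categories, and annotators from different cultures, since the labels themselves can carry bias.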

Ultimately, we want AI in which every culture is equally and fairly represented. By working together, we can create AI that celebrates cultural diversity and promotes inclusion.